Automation as a Service — Introducing Scriptflask

Authors : Fazal Allanabanda and Vilas Veeraraghavan

Introduction

Less than a year ago, we shared our testing wins and the challenges that lay ahead in testing High Impact Titles (HITs) globally, all while ensuring that the pace of innovation at Netflix does not slow down. Since then, we have made significant enhancements to our automation, and in this post we want to talk about how it has evolved over the past year.

Our team’s focus still remains on providing test coverage to HITs globally (before and after launch) and ensuring that any A/B test logic is verified thoroughly before test rollout. We achieve these goals using a mix of manual and automated tests.

In our previous post, we spoke about building a common set of utilities (shell/Python scripts that would communicate with each microservice in the Netflix service ecosystem), which gave us an easy way to fetch data and make data assertions on any part of the data service pipeline. However, we began to hit the limits of our model much faster than we expected. In the following sections, we talk about some of the obstacles we faced and how we developed a solution to overcome them.

Scalability

As the scope of our testing expanded to cover a substantial number of microservices in the Netflix services pipeline, the issue of scalability became more prominent. We began creating scripts (shell/python) that would serve as utilities to access data from each microservice and quickly ran into issues. Specifically,

- The ramp-up time required to get familiar with microservices and create scripts for them was non-trivial: each time we created a utility for a new service, we had to spend time understanding which items were most commonly requested from that service, what the service architecture allowed us to access, and how we could request modifications in the exposed data to get to what we wanted for testing. The effort required did not scale.

- Maintaining these scripts over time got expensive: as the velocity of each team responsible for these services varied, it was our responsibility to march alongside them, ensuring breakages were addressed immediately and our stakeholders were not impacted. Once again, with our existing team charter and composition, this was an effort that came at great cost (in both resources and time).

There are hundreds of microservices in the Netflix ecosystem, and many others are constantly in some stage of evolution to support a new product idea. We wanted a way to create test utilities for an application that could be owned by the corresponding application team members and their test counterparts. This will speed up testing, and feature teams can craft the utilities in collaboration with us. We can stay in the loop, but the time and resource investment from our team will be reduced.

Usability

As an integration test team, our focus is not only on creating new tests, but also on making the same tests easy to run for other teams so the product organization as a whole can benefit from the initial effort.

However, this means there should be a common language for these tests, so that when testers, developers and others try to run them, they can easily learn to use the tests and their results for their own purposes. Most teams focus on their own applications, but with a common set of utilities, we can empower testers to write end-to-end tests with a minimal investment of time. This will speed up the velocity of integration testing and development in general.

But the shell/Python scripts need to be downloaded from the code repository before anyone can try them out, debugging usually requires a member of our team, and the data returned from each microservice is not formatted the same way, making parsing a non-trivial task for whoever uses the scripts.

We have seen a significant benefit already from having other teams use our tools. As an example, during the recent effort to productize thumbs ratings, the QA teams working on the project used many of our utilities to run functional tests before deployment. The MAP team which delivers the landing pages for all device types also has found use for our test utilities to run integration tests. As such, a centralized API will benefit all test teams immensely.

Extensibility

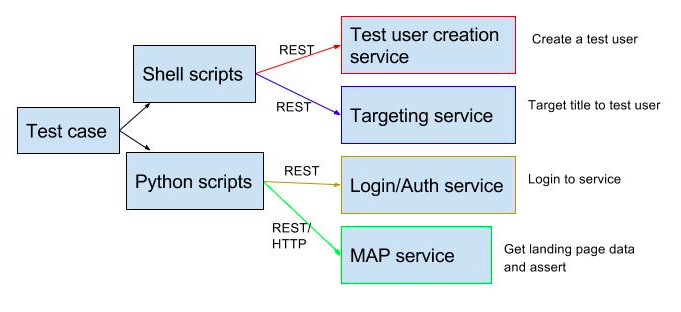

Let’s take an example test case — Verify that a test member is presented with a specific title (that is determined by the algorithm as a match for them) and gets the title in the first 3 rows of their Netflix landing page after login. This simple test sentence is easy to check manually, but when it comes to automation, it is not a single step verification. There are multiple services we will need to probe to run assertions for this test. So, we break it up as shown in the diagram below.

Each of the above steps requires calling a microservice with a specific set of arguments and then parsing the response. We call each of these steps a “simple test verb”, since each operation is a single REST/HTTP call to a service. As illustrated in the diagram, this test case interfaces with 4 different services (indicated by different colors), which means there are 4 points of possible failure in case of an API change.

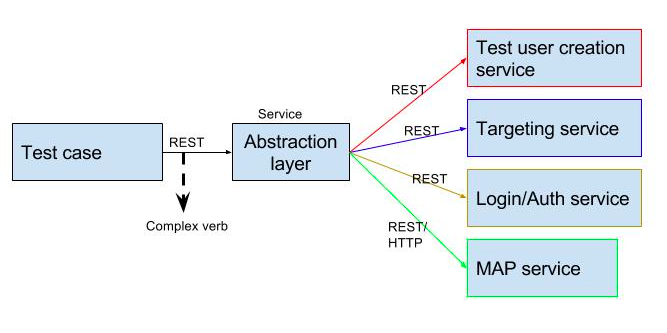

Our aim was to create higher order “complex test verbs”, each of which would call many such simple verbs in one operation. This would effectively mean that all of the test steps above (which are simple verbs) can be encapsulated by a higher order complex verb that does everything in one step, like this :

- Assert (complex verb) — where complex verb = “3rd row of the landing page for new member (who is targeted for the title) contains the title”

The advantage of creating complex test verbs is that it allows integration testers to write very specific tests that can be reused in simple ways, e.g. by developers to verify bug fixes, or by other teams to assert specific behavior that they themselves have no knowledge of or visibility into. Our existing approach put too many obstacles in the way of creating these verbs and reaching the level of extensibility we wanted from the test framework. We needed an abstraction layer that would allow us to get to our goal.
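The composition of simple verbs into a complex verb can be sketched roughly as follows. This is only an illustration of the idea, not Scriptflask code: the function names, the in-memory dictionaries standing in for services, and the sample data are all hypothetical.

```python
from typing import Dict, List

# Hypothetical in-memory stand-ins for two separate services.
# In a real system each lookup would be a single REST call.
ALLOCATIONS: Dict[str, str] = {"member-1": "title-42"}
LANDING_PAGES: Dict[str, List[List[str]]] = {
    "member-1": [["title-7"], ["title-42", "title-9"], ["title-3"], ["title-8"]],
}


def get_targeted_title(member_id: str) -> str:
    """Simple verb: which title is this member targeted for?"""
    return ALLOCATIONS[member_id]


def get_landing_page_rows(member_id: str) -> List[List[str]]:
    """Simple verb: fetch the rows of the member's landing page."""
    return LANDING_PAGES[member_id]


def title_in_top_rows(member_id: str, max_rows: int = 3) -> bool:
    """Complex verb: chain the simple verbs into one assertion-ready answer."""
    title = get_targeted_title(member_id)
    rows = get_landing_page_rows(member_id)
    return any(title in row for row in rows[:max_rows])
```

A test author would then only need `assert title_in_top_rows("member-1")`, without knowing which services sit behind the individual verbs.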

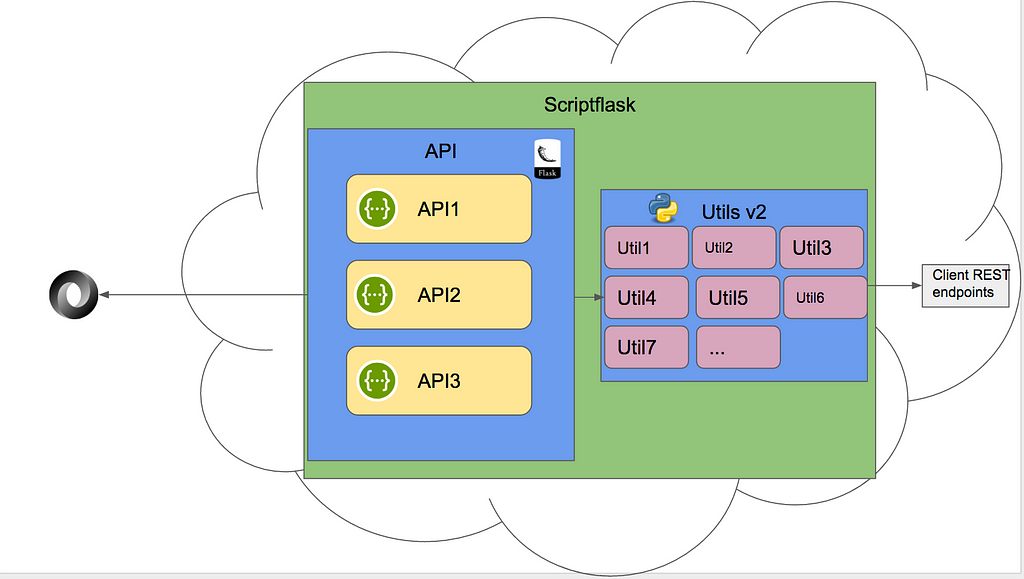

Introducing Scriptflask

To overcome the scalability, usability and extensibility drawbacks we encountered, we refactored our framework into a first order application in the Netflix ecosystem. Functionally, we had great success with the approach of automating against REST endpoints. Our intention was to further build on this model — thus, Scriptflask served as a logical next step for our test automation. Our existing set of python utility scripts took in a set of parameters, and performed one function in isolation. At its core, Scriptflask is an aggregation of these utilities exposed as REST endpoints.

How did we get here?

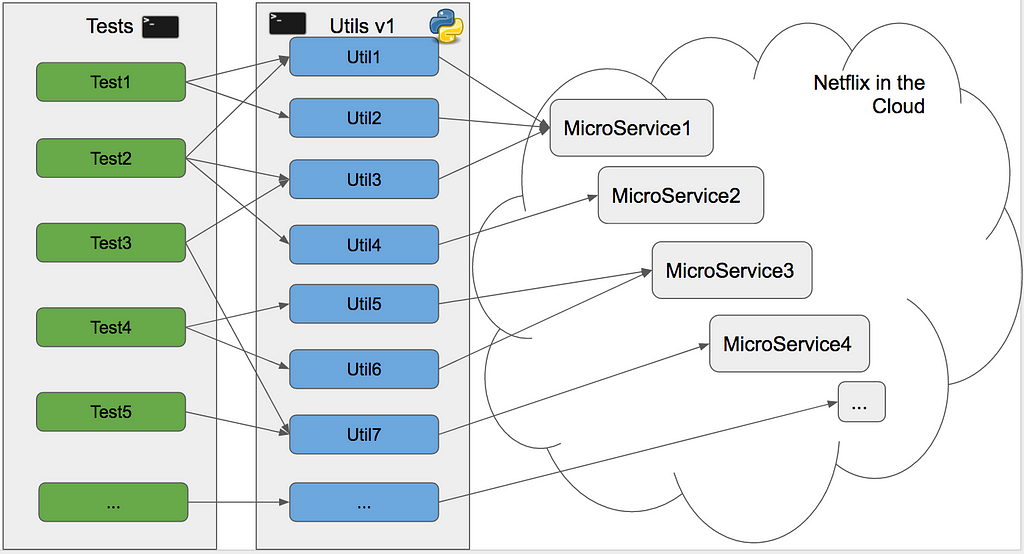

Our infrastructure used to look like this:

Our test cases were shell scripts that depended on utilities, each of which performed one function. Those utilities then accessed individual microservices to retrieve or modify data for users. In order to get more teams at Netflix to use these utilities, we considered two options:

- Publish the utilities as a library that could be picked up by other teams

- Expose the utilities as a REST endpoint

We went with option 2 for the simple reason that it would eliminate version conflicts, and would also eliminate the need to retrieve any of our code, since all interaction could be achieved via HTTP calls.

Why Flask?

We used the Flask web server to front our python scripts.

Our primary requirement was a REST API. Since we had no interest in building a UI and no use for databases, Flask worked perfectly for us. Flask is also used by multiple Python applications at Netflix, providing us with easy in-house support. We looked at Django and Pyramid as well, and eventually went with Flask, which offered us the most adaptability and ease of implementation.

Scriptflask consists of two components:

- Utilities: these are the utilities from our test automation, refactored as Python functions and containing more business logic, powering our strategy of creating “complex test verbs”

- API: this is an implementation of the Flask web server. It consists of input verification, service discovery, self tests and healthchecks, and exposes the functionality of the utilities as REST endpoints, returning data formatted as JSON.
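To make the split between the two components concrete, here is a minimal sketch of a utility function fronted by a Flask endpoint. It is an assumption about the general shape only: the route names, the `lookup_member_country` utility and its data are hypothetical, and service discovery and self tests are left out.

```python
from flask import Flask, jsonify, request

app = Flask(__name__)


def lookup_member_country(member_id: str) -> str:
    """Hypothetical utility; a real one would call a microservice."""
    return {"member-1": "US"}.get(member_id, "unknown")


@app.route("/healthcheck")
def healthcheck():
    # Lets a deployment tool confirm that the service is up.
    return jsonify(status="ok")


@app.route("/members/country")
def member_country():
    member_id = request.args.get("member_id")
    if not member_id:  # input verification before calling the utility
        return jsonify(error="member_id is required"), 400
    # The utility's result is returned to the caller as JSON.
    return jsonify(member_id=member_id, country=lookup_member_country(member_id))
```

With this layout a caller needs nothing but an HTTP client, for example `GET /members/country?member_id=member-1`, and never has to check out the utility code.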

Benefits of Scriptflask

Scalability

Scriptflask is scalable because adding to it is easy. Each application owner can add a single utility that interacts with their application, and doing so is relatively simple. It took one of our developers a day to obtain our source code, deploy locally, and implement REST endpoints for a new microservice that they implemented.

This significantly reduces test implementation time. As the integration team, we can focus on executing tests and increase our test coverage this way.

As a first order application in the Netflix ecosystem, we also take advantage of being able to easily schedule deployments, scaling and Continuous Integration using Spinnaker.

Usability

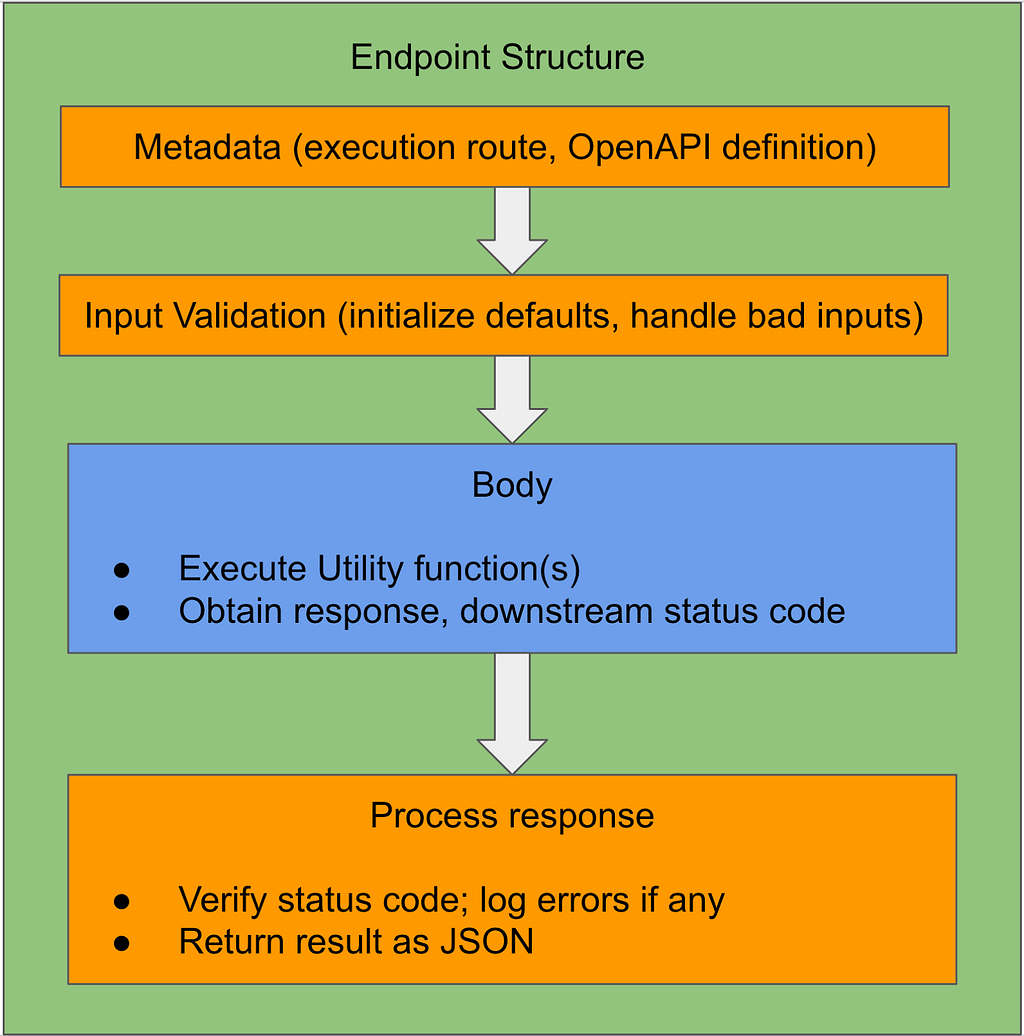

Scriptflask makes it very easy to get started implementing a REST endpoint: logging, error handling and data encoding are all handled out of the box, requiring very little custom code to be written. An endpoint is quite straightforward to implement, with the body of the request handler being the only component that needs to be written; the remainder comes for free.
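One common way to give endpoint authors logging, error handling and encoding "for free" is a decorator. The sketch below uses only the Python standard library and is an assumption about how such a wrapper could look, not the actual Scriptflask mechanism; `endpoint` and `title_metadata` are hypothetical names.

```python
import functools
import json
import logging
from typing import Any, Callable, Dict, Tuple

logger = logging.getLogger("scriptflask_sketch")


def endpoint(handler: Callable[..., Dict[str, Any]]) -> Callable[..., Tuple[str, int]]:
    """Wrap a handler with logging, error handling and JSON encoding."""

    @functools.wraps(handler)
    def wrapper(**params: Any) -> Tuple[str, int]:
        logger.info("calling %s with %s", handler.__name__, params)
        try:
            return json.dumps({"data": handler(**params)}), 200
        except Exception as exc:  # deliberately broad: report any failure as JSON
            logger.exception("%s failed", handler.__name__)
            return json.dumps({"error": str(exc)}), 500

    return wrapper


@endpoint
def title_metadata(title_id: str) -> Dict[str, Any]:
    # The only part an endpoint author writes; the lookup itself is made up.
    if not title_id.isdigit():
        raise ValueError("title_id must be numeric")
    return {"title_id": int(title_id), "type": "movie"}
```

Calling `title_metadata(title_id="42")` returns a JSON string and a 200 status, while a bad input comes back as a JSON error with a 500 status, without the handler containing any of that plumbing.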

A significant challenge we encountered with our previous iteration of test automation was keeping track of the utilities we had implemented as a team. This is one reason we treated OpenAPI specifications as a must-have feature when we were gathering requirements for Scriptflask. The OpenAPI implementation makes it much easier for users of Scriptflask to execute operations as well as identify any missing endpoints.
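As a rough illustration of why a machine-readable specification helps with keeping track of endpoints, the sketch below builds a minimal OpenAPI 3.0 document from a list of endpoint records. The record format, the helper name and the sample endpoints are hypothetical; how Scriptflask actually produces its specification is not described here.

```python
from typing import Any, Dict, List


def build_openapi_spec(title: str, endpoints: List[Dict[str, str]]) -> Dict[str, Any]:
    """Build a minimal OpenAPI 3.0 document from a list of endpoint records."""
    paths: Dict[str, Any] = {}
    for ep in endpoints:
        operation = {
            "summary": ep["summary"],
            "responses": {"200": {"description": "Successful response"}},
        }
        # Group operations under their path, keyed by lowercase HTTP method.
        paths.setdefault(ep["path"], {})[ep["method"].lower()] = operation
    return {
        "openapi": "3.0.0",
        "info": {"title": title, "version": "0.1.0"},
        "paths": paths,
    }


# Hypothetical endpoint inventory.
spec = build_openapi_spec(
    "Scriptflask sketch",
    [
        {"path": "/healthcheck", "method": "GET", "summary": "Liveness probe"},
        {"path": "/members/country", "method": "GET", "summary": "Member country"},
    ],
)
```

Listing `spec["paths"]` then gives a single inventory of what exists, which makes a missing endpoint easy to spot.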

Extensibility

Another challenge that we faced with our previous implementation was that other teams weren’t as willing to use our test automation because there was a learning curve involved. We wanted to mitigate this by providing them an easier way to obtain certain tests by converting entire test cases into a REST endpoint that would execute the test, and in the response return the data that was generated or manipulated, as well as the results of any validation.

This has now enabled us to expose multiple back-end verification tests for our High Impact Titles (HITs) as REST endpoints to the front-end teams. We have also been able to expose end-to-end tests for one of our microservices as a series of REST endpoints.

Another advantage of exposing our test cases as REST endpoints is that they’re language-agnostic, and this enables multiple teams working with different frameworks to incorporate our test cases.
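The response of such a test-case endpoint, carrying both the generated data and the validation results, could be assembled along these lines. This is a sketch under assumptions: the payload field names (`data`, `validations`, `passed`), the helper and the sample checks are hypothetical.

```python
from typing import Any, Callable, Dict, List, Tuple

# A check is a name plus a predicate over the data produced by the test.
Check = Tuple[str, Callable[[Dict[str, Any]], bool]]


def run_test_case(data: Dict[str, Any], checks: List[Check]) -> Dict[str, Any]:
    """Run every named check against the data and build the response payload."""
    validations = [{"name": name, "passed": bool(check(data))} for name, check in checks]
    return {
        "data": data,
        "validations": validations,
        "passed": all(v["passed"] for v in validations),
    }


# Hypothetical data a test might have generated, and two checks on it.
generated = {"member_id": "member-1", "rows": [["title-42"], ["title-9"]]}
checks: List[Check] = [
    ("has_rows", lambda d: len(d["rows"]) > 0),
    ("title_in_first_row", lambda d: "title-42" in d["rows"][0]),
]
response = run_test_case(generated, checks)
```

Because the payload is plain JSON-compatible data, a client in any language can read the overall `passed` flag or inspect the individual validations.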

Other advantages of using Scriptflask

Reduced complexity

Scriptflask significantly reduces the complexity of our integration tests. Each test case is now a simple sequence of REST calls. In some cases, the test itself is a single “complex verb” which can be executed as one step. For a client of Scriptflask, using it is no different than using any other service REST endpoint. For those interested in contributing, installing and getting started with Scriptflask and our integration tests has now become a clean, two-step install, and a person with minimal knowledge of Python can get up and running in less than ten minutes.

This helps onboard new testers to our test framework very quickly and lowers the barrier to contributing to the test effort. We have been able to draw in developers and testers from a variety of feature teams to contribute to Scriptflask, which has resulted in a lot of active discussion about tests and tools and spurs on more innovation and creativity.

Navigating around reliability/availability issues for services

Netflix microservices are deployed in multiple AWS regions. Using Scriptflask, we were able to accomplish something we would never have achieved efficiently in our old system: fallback from one region to another for all services that are in active-active deployments. We have also built in accommodation for when the reliability team runs chaos exercises that impact services.

The advantage we gain with Scriptflask is that we are able to make intelligent decisions on routing without the tester having to learn and grasp additional logic. This helps relieve the tester from having the responsibility of fighting external variabilities in the system that could have made the test fragile/flaky.
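The fallback behaviour described above can be illustrated with a small sketch. It assumes a simple "try each region in order" policy, which may well be simpler than the routing decisions Scriptflask actually makes; the helper names, the exception type and the region list are illustrative only.

```python
from typing import Callable, Dict, Sequence


class AllRegionsFailed(Exception):
    """Raised when no region could serve the request."""


def call_with_fallback(
    fetch: Callable[[str], Dict[str, str]],
    regions: Sequence[str],
) -> Dict[str, str]:
    """Try each region in order and return the first successful response."""
    errors: Dict[str, str] = {}
    for region in regions:
        try:
            return fetch(region)
        except ConnectionError as exc:
            errors[region] = str(exc)  # remember the failure, try the next region
    raise AllRegionsFailed(errors)


def fake_fetch(region: str) -> Dict[str, str]:
    # Stand-in for a real service call; pretends us-east-1 is unavailable.
    if region == "us-east-1":
        raise ConnectionError("region unavailable")
    return {"served_by": region}
```

A test that calls `call_with_fallback(fake_fetch, ["us-east-1", "us-west-2"])` gets its answer from the second region and never has to contain any routing logic itself.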

Speed of test execution

As we started moving tests to use Scriptflask, we found a significant decrease in test execution times. In one case, we saw the test execution time drop from 5 minutes to 56 seconds. This happened because the complicated filtering and processing logic was delegated to Scriptflask, where we were able to optimize many of the most convoluted test steps. This increase in speed lets the tests focus on assertions that are quick and simple to fetch and verify, instead of chasing down false positives caused by systemic inefficiencies.

While we did not plan on improving test execution speed as an initial aim, it was a welcome benefit from the whole exercise.

Future enhancements

Our short-term objective is to promote Scriptflask within the organization and make testing all aspects of the Netflix service pipeline straightforward and intuitive to learn. To do this we will have to:

- Enlist: Involve other feature teams in the development process so they can witness the benefits for themselves, and create a sense of ownership which will generate a virtuous circle of adoption and participation

- Enhance: Create tools to auto-generate scaffolding for new endpoints and new services. This will further shrink the barriers to getting started, helping on-board engineers faster.

- Expand: Integration with Spinnaker pipelines will expand Scriptflask’s scope by enabling feature teams to expand their test coverage by running end-to-end tests as part of canary/test deployments.

In addition to enhancements that are Netflix focused, we are also considering open sourcing some aspects of Scriptflask.

In the long term, more challenges lie ahead of us. We’re always exploring new ways to test and improve the overall velocity of adding new features and accelerating the rollout of A/B tests. If this has piqued your interest, and you wish to join us on this mission, we want to hear from you. Exciting times are ahead!

This post was originally published in Netflix TechBlog on Medium.