If you are interested in pushing the frontier forward in the recommender systems space, take a look at some of our relevant open positions!

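Today on Weibo I saw someone share the functional-style code below. I have pasted it here, with slight changes to the original. At first glance the functional version is genuinely puzzling; look at it more closely and you may…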

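Starting with 16.04 (on the AMD64 architecture), Ubuntu officially supports ZFS as a data (non-root) file system, but it has to be installed separately. The process is very simple:

sudo apt install zfs zfsutils-linux

Verify that ZFS is installed and loaded:

$ lsmod | grep zfs
zfs 2801664 11
zunicode 331776 1 zfs
zcommon 57344 1 zfs
znvpair 90112 2 zfs,zcommon
spl 102400 3 zfs,zcommon,znvpair
zavl 16384 1 zfs

ZFS is a seriously impressive file system. Its main features include: snapshots, copy-on-write…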

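可达书院 was founded to do (online) IT training. Ever since, I have kept thinking about how online training can be made efficient, as efficient as face-to-face, one-on-one training.

My first idea (around 2008) was to record training videos and let interested people learn by watching them online. The premise was that for learning IT skills, especially hands-on ones, a video explanation is more effective and easier to follow than a written description. So, over more than a year, I recorded a set of OpenBSD video tutorials for absolute beginners.

A few more years slipped by, and I began to feel this was not enough. It would work better if learners could practice each point while watching the video that teaches it. I wanted to change the video-only interface into one with the video on the left and a command-line interface on the right, so that people could watch and practice at the same time. But I come from a system administration background and programming is not my strong suit, so the idea went unrealized for a long time.

Later I recorded an OpenBSD quick-start video series aimed at people with some Linux/Unix experience.

Then, by chance, I came across the website 实验楼. It felt close to what I had in mind, although its tutorials are mostly text. I registered an account there and took a few courses. Over time I felt that this approach still fell short, even though it was a real improvement over simply watching videos. For me there were two shortcomings. First, I could not learn at the pace I wanted: sometimes I want to skim quickly, and sometimes I want to think things over slowly, or I am not in good form and cannot keep up a normal pace. Second, there was no one to guide me when I was confused.

So I kept thinking: what way of learning (or training) maximizes efficiency?

For me personally, the biggest obstacle to progress is that no one is there to point the way when you are confused. You have to stop, search, think, and go back over earlier material to check whether you missed something. A long time may pass and the question is still unresolved. Your learning stalls, or you have to carry on with the question unanswered, and everything that follows is much less effective.

So how can this be solved? The best solution I can think of for now is to have a one-on-one tutor, or a guide, while you learn. This person knows roughly what experience you have and how well you understand the technology you are learning, and he or she has practical experience with it. When you are in doubt you can ask right away and clear up the confusion (although this may not work 100% of the time). Face-to-face answers are best; real-time video over the network comes next.

Meanwhile, with the rise of virtualization, cloud computing, and container technology over the past few years, many cloud- and container-oriented tools and platforms have appeared, such as the somewhat older OpenStack, the newer Kubernetes, Mesos, and fleet, as well as SmartOS/Triton and CoreOS. These are bound to change the way system administrators (or DevOps, or CloudOps) work.

I have been trying to keep up with this trend myself. To learn these new platforms and tools I have watched many videos, read a lot of documentation and e-books, and spent a lot of time tinkering. Along the way I came to feel that watching videos is not necessarily the best approach. Sometimes you need a book, preferably a paper one, so that you can skim ten lines at a glance or go word by word and think slowly. Sometimes you need video, so that you can see what an operation actually looks like in practice (this is where text is comparatively weak).

One more point: if it is video, it is best left unedited. When something unexpected happens while I am recording, all I can do is pause the recording, troubleshoot, and then resume. I think the troubleshooting process is often very valuable learning material that lets learners understand more deeply how the technology works. The trouble is that this process can take a long time, which makes it a poor fit for video recording.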

We are pleased to announce a significant upgrade to one of our more popular OSS projects. Chaos Monkey 2.0 is now on GitHub!

Years ago, we decided to improve the resiliency of our microservice architecture. At our scale it is guaranteed that servers on our cloud platform will sometimes suddenly fail or disappear without warning. If we don’t have proper redundancy and automation, these disappearing servers could cause service problems.

The Freedom and Responsibility culture at Netflix doesn’t have a mechanism to force engineers to architect their code in any specific way. Instead, we found that we could build strong alignment around resiliency by taking the pain of disappearing servers and bringing that pain forward. We created Chaos Monkey to randomly choose servers in our production environment and turn them off during business hours. Some people thought this was crazy, but we couldn’t depend on the infrequent occurrence to impact behavior. Knowing that this would happen on a frequent basis created strong alignment among our engineers to build in the redundancy and automation to survive this type of incident without any impact to the millions of Netflix members around the world.

We value Chaos Monkey as a highly effective tool for improving the quality of our service. Now Chaos Monkey has evolved. We rewrote the service for improved maintainability and added some great new features. The evolution of Chaos Monkey is part of our commitment to keep our open source software up to date with our current environment and needs.

Integration with Spinnaker

Chaos Monkey 2.0 is fully integrated with Spinnaker, our continuous delivery platform.

Service owners set their Chaos Monkey configs through the Spinnaker apps, Chaos Monkey gets information about how services are deployed from Spinnaker, and Chaos Monkey terminates instances through Spinnaker.

Since Spinnaker works with multiple cloud backends, Chaos Monkey does as well. In the Netflix environment, Chaos Monkey terminates virtual machine instances running on AWS and Docker containers running on Titus, our container cloud.

Integration with Spinnaker gave us the opportunity to improve the UX as well. We interviewed our internal customers and came up with a more intuitive method of scheduling terminations. Service owners can now express a schedule in terms of the mean time between terminations, rather than a probability over an arbitrary period of time. We also added grouping by app, stack, or cluster, so that applications that have different redundancy architectures can schedule Chaos Monkey appropriate to their configuration. Chaos Monkey now also supports specifying exceptions so users can opt out specific clusters. Some engineers at Netflix use this feature to opt out small clusters that are used for testing.

Figure: Chaos Monkey Spinnaker UI
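The schedule based on mean time between terminations, described above, can be related back to a per-day probability. The following is a minimal sketch, assuming each work day is an independent trial in which a group is terminated with probability 1/mean. The function names and the per-day model are illustrative assumptions, not Chaos Monkey's actual scheduler.

```python
import random


def daily_termination_probability(mean_work_days: float) -> float:
    """Convert a mean time between terminations (in work days) to a per-day probability.

    Assumption: each work day is an independent trial, so the gap between
    terminations is geometrically distributed with mean 1/p.
    """
    if mean_work_days < 1:
        raise ValueError("mean must be at least one work day")
    return 1.0 / mean_work_days


def should_terminate_today(mean_work_days: float, rng: random.Random) -> bool:
    """Decide whether to schedule a termination for one group today."""
    return rng.random() < daily_termination_probability(mean_work_days)


def simulate_mean_gap(mean_work_days: float, days: int, seed: int = 0) -> float:
    """Simulate `days` work days and return the observed mean gap between terminations."""
    rng = random.Random(seed)
    terminations = sum(should_terminate_today(mean_work_days, rng) for _ in range(days))
    return days / terminations if terminations else float("inf")
```

Under this assumption, a service owner who asks for a mean of 5 work days gets a daily probability of 0.2, and a long simulation should show an observed gap close to 5 days.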

Tracking Terminations

Chaos Monkey can now be configured with trackers. These external services receive a notification whenever Chaos Monkey terminates an instance. Internally, we use this feature to report metrics into Atlas, our telemetry platform, and Chronos, our event tracking system. The graph below, taken from the Atlas UI, shows the number of Chaos Monkey terminations for a segment of our service. We can see chaos in action. Chaos Monkey even periodically terminates itself.

Figure: Chaos Monkey termination metrics in Atlas
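The tracker idea described above can be sketched as a small interface that is called once per termination. This is only an illustration: the `Termination` record, the `Tracker` protocol, `InMemoryTracker`, and `notify_trackers` are hypothetical names chosen for this example, not the API of Chaos Monkey, Atlas, or Chronos.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import List, Protocol, Sequence


@dataclass(frozen=True)
class Termination:
    """Hypothetical record of one terminated instance."""
    app: str
    instance_id: str
    time: datetime


class Tracker(Protocol):
    """Anything that wants to be told about terminations."""

    def track(self, termination: Termination) -> None:
        ...


class InMemoryTracker:
    """Toy tracker that stores events in a list (stand-in for a metrics backend)."""

    def __init__(self) -> None:
        self.events: List[Termination] = []

    def track(self, termination: Termination) -> None:
        self.events.append(termination)


def notify_trackers(termination: Termination, trackers: Sequence[Tracker]) -> List[Exception]:
    """Notify every tracker; collect failures instead of stopping at the first one."""
    errors: List[Exception] = []
    for tracker in trackers:
        try:
            tracker.track(termination)
        except Exception as exc:  # a failing tracker should not block the others
            errors.append(exc)
    return errors
```

Collecting errors rather than raising is a design choice made for this sketch: one unavailable tracking backend does not prevent the remaining trackers from recording the event.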

Termination Only

Netflix only uses Chaos Monkey to terminate instances. Previous versions of Chaos Monkey allowed the service to ssh into a box and perform other actions like burning up CPU, taking disks offline, etc. If you currently use one of the prior versions of Chaos Monkey to run an experiment that involves anything other than turning off an instance, you may not want to upgrade since you would lose that functionality.

Finale

We also used this opportunity to introduce many small features such as automatic opt-out for canaries, cross-account terminations, and automatic disabling during an outage. Find the code on the Netflix GitHub account and embrace the chaos!

-Chaos Engineering Team at Netflix

Lorin Hochstein, Casey Rosenthal

As per undeadly.org: With a small commit, OpenBSD now has a hypervisor and virtualization in-tree. This has been a lot of hard work by Mike Larkin, Reyk Flöter, and many others. VMM requires certain hardware features (Intel Nehalem or later,…

Introduction

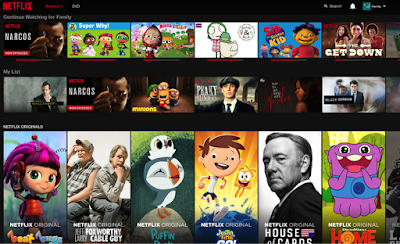

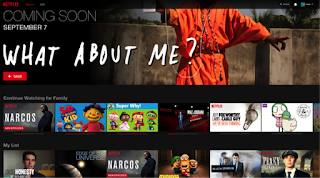

When a member opens the Netflix website or app, she may be looking to discover a new movie or TV show that she has never watched before, or she may want to continue watching a partially watched movie or a TV show she has been binging on. If we can reasonably predict when a member is more likely to be in continuation mode and which shows she is more likely to resume, it makes sense to place those shows in prominent places on the home page.

Continue Watching

- Improve the placement of the row on the page by placing it higher when a member is more likely to resume a show (continuation mode), and lower when a member is more likely to look for a new show to watch (discovery mode)
- Improve the ordering of recently-watched shows in the row using their likelihood to be resumed in the current session

A member is more likely to be in continuation mode if she:

- is in the middle of a binge; i.e., has been recently spending a significant amount of time watching a TV show, but hasn’t yet reached its end
- has partially watched a movie recently
- has often watched the show around the current time of the day or on the current device

A member is more likely to be in discovery mode if she:

- has just finished watching a movie or all episodes of a TV show
- hasn’t watched anything recently
- is new to the service

Building a Recommendation Model for Continue Watching

- Member-level features:
  - Data about member’s subscription, such as the length of subscription, country of signup, and language preferences
  - How active has the member been recently
  - Member’s past ratings and genre preferences
- Features encoding information about a show and interactions of the member with it:
  - How recently was the show added to the catalog, or watched by the member
  - How much of the movie/show the member watched
  - Metadata about the show, such as type, genre, and number of episodes; for example kids shows may be re-watched more
  - The rest of the catalog available to the member
  - Popularity and relevance of the show to the member
  - How often do the members resume this show
- Contextual features:
  - Current time of the day and day of the week
  - Location, at various resolutions
  - Devices used by the member
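To make the feature list above concrete, here is a minimal sketch of how a handful of such features could feed a score used to order shows in the row by their likelihood of being resumed. It assumes a simple logistic model with hand-picked weights; the source does not say which model is used, and the feature names, weights, and function names below are all hypothetical.

```python
import math
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class ShowFeatures:
    """A few hypothetical features for one (member, show) pair."""
    show_id: str
    days_since_last_watch: float       # show/member interaction feature
    fraction_watched: float            # 0.0 to 1.0
    watched_at_this_hour_before: bool  # contextual feature


# Hypothetical weights; a real model would learn these from viewing data.
WEIGHTS = {
    "bias": -0.5,
    "days_since_last_watch": -0.3,
    "fraction_watched": 1.2,
    "watched_at_this_hour_before": 0.8,
}


def resume_probability(f: ShowFeatures) -> float:
    """Logistic score: estimated probability that the member resumes this show."""
    z = (
        WEIGHTS["bias"]
        + WEIGHTS["days_since_last_watch"] * f.days_since_last_watch
        + WEIGHTS["fraction_watched"] * f.fraction_watched
        + WEIGHTS["watched_at_this_hour_before"] * float(f.watched_at_this_hour_before)
    )
    return 1.0 / (1.0 + math.exp(-z))


def rank_for_row(candidates: List[ShowFeatures]) -> List[str]:
    """Order recently watched shows from most to least likely to be resumed."""
    ranked = sorted(candidates, key=resume_probability, reverse=True)
    return [f.show_id for f in ranked]
```

With these made-up weights, a show watched yesterday at the same hour ranks above one last watched ten days ago. The same per-show scores could plausibly inform where the row sits on the page, but that use is not shown here.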

Two applications, two models

Show ranking

Row placement

Reusing the show-ranking model

Dedicated row model

Tuning the placement

Context Awareness

Serving the Row

Conclusion

Are you tantalized by Systems We Love but you don’t know what proposal to submit? For those looking for proposal guidance, my advice is simple: find the love. Just as every presentation title at !!Con must assert its enthusiasm by ending with two bangs, you can think of every talk at Systems We Love as beginning with an implicit “Why I love…” So instead of a lecture on, say, the innards of ZFS (and well you may love ZFS!), pick an angle on ZFS that you particularly love. Why do you love it or what do you love about it? Keep it personal: this isn’t about asserting the dominance of one system—this is about you and a system (or an aspect of a system) that you love.