Introduction

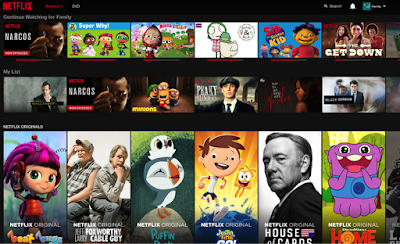

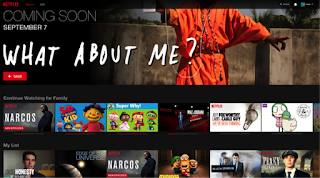

When a member opens the Netflix website or app, she may be looking to discover a new movie or TV show that she has never watched before, or she may want to continue watching a partially watched movie or a TV show she has been bingeing. If we can reasonably predict when a member is more likely to be in continuation mode and which shows she is more likely to resume, it makes sense to place those shows prominently on the home page.

Continue Watching

- Improve the placement of the row on the page by placing it higher when a member is more likely to resume a show (continuation mode), and lower when a member is more likely to look for a new show to watch (discovery mode)

- Improve the ordering of recently-watched shows in the row using their likelihood to be resumed in the current session
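The two goals above can be illustrated with a minimal sketch. It assumes two hypothetical model outputs are already available: a per-show probability of being resumed in the current session, and a single probability that the member is in continuation mode. The function names, the linear mapping from probability to row position, and the example scores are assumptions made for illustration, not a description of the production system.

```python
from typing import Dict, List


def order_continue_watching(resume_prob: Dict[str, float]) -> List[str]:
    """Order recently watched shows by their (assumed) resume probability."""
    return sorted(resume_prob, key=lambda show: resume_prob[show], reverse=True)


def place_row(continuation_prob: float, num_rows: int) -> int:
    """Map a continuation-mode probability to a 0-based row position.

    Illustrative assumption: a linear mapping, where probability 1.0 puts
    the row at the top (position 0) and 0.0 puts it at the bottom.
    """
    if not 0.0 <= continuation_prob <= 1.0:
        raise ValueError("continuation_prob must be in [0, 1]")
    if num_rows < 1:
        raise ValueError("num_rows must be at least 1")
    return round((1.0 - continuation_prob) * (num_rows - 1))


# Hypothetical scores for three recently watched titles.
scores = {"show_a": 0.15, "show_b": 0.70, "show_c": 0.40}
row_order = order_continue_watching(scores)
row_position = place_row(continuation_prob=0.9, num_rows=11)
print(row_order, row_position)
```

In this sketch a member who is very likely in continuation mode sees the row near the top of the page, with the most resumable show first. A real system could use any monotonic mapping, or score the row jointly with the other rows on the page.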

- is in the middle of a binge; i.e., has been recently spending a significant amount of time watching a TV show, but hasn’t yet reached its end

- has partially watched a movie recently

- has often watched the show around the current time of the day or on the current device

- has just finished watching a movie or all episodes of a TV show

- hasn’t watched anything recently

- is new to the service

Building a Recommendation Model for Continue Watching

- Member-level features:
  - Data about the member’s subscription, such as the length of subscription, country of signup, and language preferences
  - How active the member has been recently
  - The member’s past ratings and genre preferences

- Features encoding information about a show and interactions of the member with it:
  - How recently the show was added to the catalog or watched by the member
  - How much of the movie/show the member watched
  - Metadata about the show, such as type, genre, and number of episodes; for example, kids’ shows may be re-watched more
  - The rest of the catalog available to the member
  - Popularity and relevance of the show to the member
  - How often members resume this show

- Contextual features:
  - Current time of the day and day of the week
  - Location, at various resolutions
  - Devices used by the member
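One way to picture the three feature groups above is as separate records that are flattened into a single row per (member, show, context) triple before being passed to a model. The sketch below is only an illustration: every field name, type, and value is a hypothetical stand-in for the kinds of signals listed, and only a few features from each group are shown.

```python
from dataclasses import asdict, dataclass
from typing import Dict


@dataclass
class MemberFeatures:
    subscription_days: int        # length of subscription
    signup_country: str
    recent_active_days: int       # hypothetical measure of recent activity


@dataclass
class ShowFeatures:
    days_since_last_watched: float
    fraction_watched: float       # 0.0 to 1.0
    is_kids_show: bool
    num_episodes: int


@dataclass
class ContextFeatures:
    hour_of_day: int              # 0-23
    day_of_week: int              # 0 = Monday
    device_type: str


def build_feature_row(member: MemberFeatures,
                      show: ShowFeatures,
                      context: ContextFeatures) -> Dict[str, object]:
    """Flatten the three groups into one dict with group-prefixed keys."""
    row: Dict[str, object] = {}
    groups = (("member", member), ("show", show), ("context", context))
    for prefix, group in groups:
        for name, value in asdict(group).items():
            row[f"{prefix}.{name}"] = value
    return row


# Hypothetical example values.
features = build_feature_row(
    MemberFeatures(subscription_days=400, signup_country="US", recent_active_days=5),
    ShowFeatures(days_since_last_watched=1.5, fraction_watched=0.35,
                 is_kids_show=False, num_episodes=10),
    ContextFeatures(hour_of_day=21, day_of_week=4, device_type="tv"),
)
print(features["show.fraction_watched"])
```

Prefixing each key with its group keeps member, show, and context signals distinguishable after flattening, which can make later feature analysis easier.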

Two applications, two models

Show ranking